A/B Testing & Iteration Solutions

A/B Testing & Iteration

A/B testing presents different versions of a webpage, feature, or interface element (such as a layout, button, color, headline, or form) to separate groups of users. Version A is the current design, while Version B introduces a change. User interactions are measured against predefined metrics such as click-through rate, conversion rate, task completion time, or error rate. Comparing these metrics shows which design better meets user needs and business goals.

Iteration is the continuous process of improving a product based on test results, analytics, and user feedback. Once a winning variation is identified, it is implemented and monitored. The process does not stop there: new hypotheses are formed, further tests are run, and designs are refined over time. This cycle of test, learn, and improve keeps the product evolving with changing user expectations and usage patterns.

A/B testing and iterative design are central to building high-performing digital experiences. Instead of relying on opinions or assumptions, A/B testing compares two versions of a page or element to see which performs better with real users. Visitors are randomly split between a control (A) and a variation (B), and key metrics such as conversion rate, click-through rate, or form submissions are measured for each group. Because assignment is random, the two groups are comparable on average, so a performance difference larger than chance variation can be attributed to the specific design or content change being tested.
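One common way to implement the random split, sketched here under assumptions, is to hash a stable user identifier together with the experiment name, so each visitor lands in the same group on every visit. The function name `assign_variant` and the experiment key `checkout-cta-test` are hypothetical, and SHA-256 bucketing is one reasonable choice among several rather than any specific testing tool's method.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: tuple = ("A", "B")) -> str:
    """Deterministically map a user to one of `variants` (hypothetical helper).

    Hashing "experiment:user_id" gives a stable, roughly uniform split:
    the same user always gets the same variant within one experiment,
    while different experiments produce independent splits.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    # Interpret the first 8 bytes of the SHA-256 digest as an unsigned integer.
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return variants[bucket % len(variants)]


# Usage: simulate 10,000 visitors and count how many land in each group.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "checkout-cta-test")] += 1
print(counts)  # roughly 5,000 per group; exact numbers depend on the hash
```

Keying the hash on the experiment name keeps splits independent across experiments, and deterministic assignment avoids showing the same visitor both versions on repeat visits, which a fresh random draw per page view could do.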

A/B testing sits at the heart of conversion rate optimization (CRO). By systematically experimenting with headlines, CTAs, layouts, form lengths, or imagery, businesses can lift conversions without increasing traffic or advertising budgets. Published case studies report that relatively small adjustments, such as clarifying CTA text or improving visual emphasis, have sometimes produced double- or triple-digit percentage improvements when aligned with user intent and context. Such figures usually describe relative lifts, often from a low baseline, and should not be expected from every test. Because gains like these come without extra spending on traffic, structured experimentation is an essential part of any serious digital strategy.
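The difference between relative and absolute lift matters when reading such case studies. A minimal sketch of the arithmetic follows; the helper name `lift` and both conversion rates are hypothetical, chosen only for illustration.

```python
def lift(control_rate: float, variant_rate: float) -> tuple[float, float]:
    """Return (absolute lift in percentage points, relative lift in percent)."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive to compute a relative lift")
    absolute_pp = (variant_rate - control_rate) * 100
    relative_pct = (variant_rate - control_rate) / control_rate * 100
    return absolute_pp, relative_pct


# Hypothetical rates: control converts at 1.2%, the variation at 2.7%.
abs_pp, rel_pct = lift(0.012, 0.027)
print(f"absolute: +{abs_pp:.1f} pp, relative: +{rel_pct:.0f}%")
# prints "absolute: +1.5 pp, relative: +125%"
```

A triple-digit relative lift can therefore correspond to a modest absolute change when the baseline rate is low.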

Successful A/B testing begins with research-driven hypotheses. Analytics, heatmaps, user feedback, and usability tests reveal friction points: pages with high drop-off, CTAs that get ignored, or fields users struggle to complete. Teams then form hypotheses such as “If we simplify the form and clarify the value proposition next to the CTA, more users will complete it” and design focused tests around these ideas. Testing one key change at a time makes results easier to interpret and apply.
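Locating the step with the highest drop-off is simple arithmetic on funnel counts. The sketch below assumes step-level user counts have already been exported from an analytics tool; the function name `funnel_dropoff`, the step names, and the numbers are hypothetical.

```python
def funnel_dropoff(steps: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Return the share of users lost (0.0 to 1.0) between consecutive funnel steps."""
    transitions = []
    for (name_a, users_a), (name_b, users_b) in zip(steps, steps[1:]):
        # Guard against division by zero if a step recorded no users.
        drop = 1 - users_b / users_a if users_a else 0.0
        transitions.append((f"{name_a} -> {name_b}", drop))
    return transitions


# Hypothetical counts for illustration only.
funnel = [("landing", 10_000), ("form_start", 3_200), ("form_submit", 900)]
report = funnel_dropoff(funnel)
for transition, drop in report:
    print(f"{transition}: {drop:.0%} drop-off")

# The transition losing the largest share of users is a candidate for a hypothesis.
worst = max(report, key=lambda item: item[1])
print("Largest drop-off:", worst[0])
```

In this made-up data the form itself loses the largest share of users, which is the kind of signal that would motivate the form-simplification hypothesis above.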

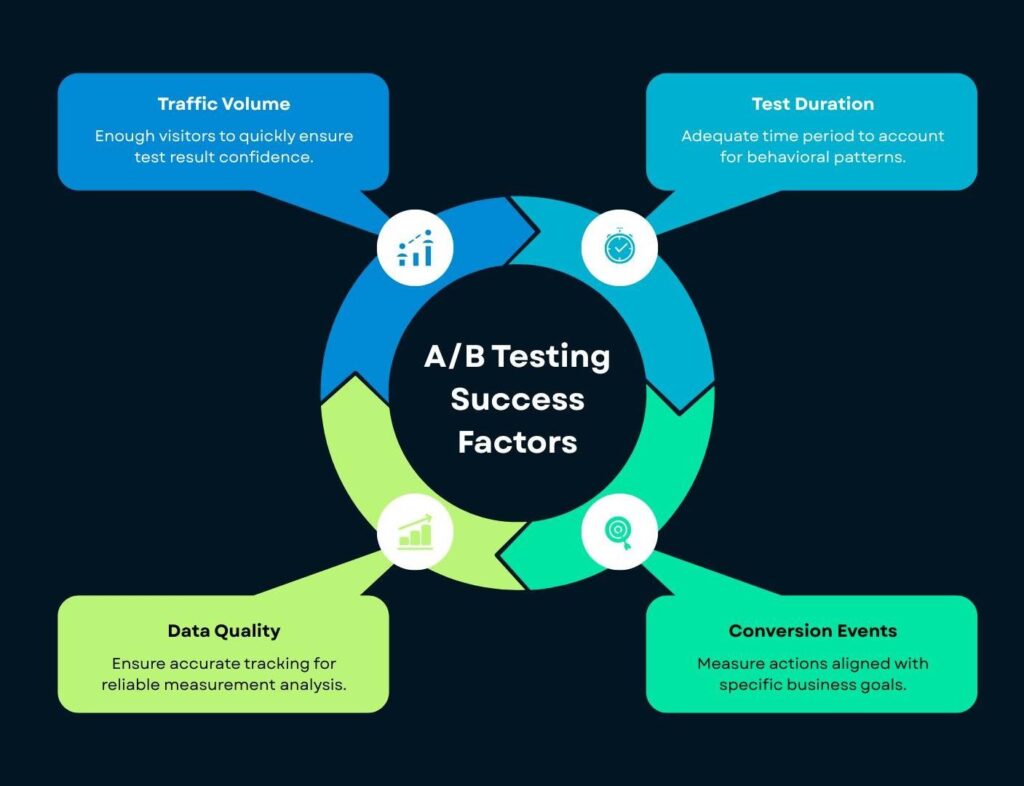

Running effective A/B tests also requires attention to statistical significance and test duration. Ending a test too early or with too little traffic can lead to misleading conclusions, where random fluctuations appear as real improvements. Best-practice guides recommend predefining goals, minimum sample sizes, and timeframes, then letting tests run long enough to account for weekday/weekend behavior and traffic variation. This discipline helps avoid “false wins” that fail to hold up in production.
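Both halves of that discipline can be sketched with Python's standard library: estimate the sample size per group before the test starts, then evaluate the result once that size is reached with a two-sided, two-proportion z-test. These are common normal-approximation formulas, not the method of any particular testing platform; the function names, baseline rate, and result counts are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_control: float, p_variant: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed in EACH group to detect p_control -> p_variant.

    Normal approximation for a two-sided test of two proportions.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)            # about 0.84 for 80% power
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    n = (z_alpha + z_beta) ** 2 * variance / (p_variant - p_control) ** 2
    return math.ceil(n)


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) for B's conversion rate versus A's."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)  # pooled rate under "no difference"
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# 1) Before the test: plan to detect a change from a 5% to a 6% conversion rate.
n_needed = sample_size_per_group(0.05, 0.06)
print("users per group:", n_needed)  # roughly 8,150

# 2) Only after each group reaches n_needed (hypothetical results):
z, p = two_proportion_z_test(conv_a=410, n_a=8200, conv_b=492, n_b=8200)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Fixing the sample size in advance and evaluating once is what protects against "false wins"; checking the p-value repeatedly and stopping at the first significant reading inflates the false-positive rate.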

Iteration is where A/B testing becomes truly powerful. Rather than treating each test as a one-off experiment, high-performing teams use results to refine designs continuously. A winning variation becomes the new control, forming the baseline for future tests. Over time, this compounding process leads to interfaces that are sharper, more intuitive, and better aligned with user expectations. It also reduces risk when introducing major changes, since new ideas can be validated on a subset of traffic before global rollout.
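The rule that a winning variation becomes the new control can be expressed as a small decision step. This is a minimal sketch under assumptions: the `TestResult` fields, the 0.05 significance threshold, and the test history are all hypothetical, and real teams may also weigh effect size, guardrail metrics, or implementation cost before promoting a variant.

```python
from dataclasses import dataclass


@dataclass
class TestResult:
    """Outcome of one hypothetical A/B test of a challenger against the current control."""
    challenger: str
    relative_lift: float  # e.g. 0.08 means +8% versus the control
    p_value: float


def next_control(current: str, result: TestResult, alpha: float = 0.05) -> str:
    """Promote the challenger only if it won with statistical significance."""
    if result.p_value < alpha and result.relative_lift > 0:
        return result.challenger
    return current  # inconclusive or losing tests leave the baseline unchanged


# Hypothetical sequence of tests; each challenger builds on the current control.
control = "original page"
history = [
    TestResult("clearer CTA text", relative_lift=0.08, p_value=0.01),
    TestResult("clearer CTA text + shorter form", relative_lift=0.03, p_value=0.40),
    TestResult("clearer CTA text + trust badges", relative_lift=0.05, p_value=0.02),
]
for result in history:
    control = next_control(control, result)
    print(f"after testing '{result.challenger}': control is '{control}'")
```

Each promoted variant becomes the baseline the next challenger is measured against, which is how improvements accumulate across tests.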

A/B testing increasingly intersects with performance and Core Web Vitals, Google's metrics for loading speed (Largest Contentful Paint, LCP), interaction responsiveness (Interaction to Next Paint, INP), and visual stability (Cumulative Layout Shift, CLS). Industry studies published in 2024–2025 report links between these metrics and conversion rates, with sites that meet the Core Web Vitals recommendations seeing lower abandonment and higher conversions. Experiments that simplify pages, reduce heavy scripts, or streamline above-the-fold content can improve UX and Core Web Vitals at the same time, benefiting both user satisfaction and SEO.
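When a test changes page weight or scripts, Core Web Vitals can be tracked per variant as guardrail metrics. The sketch assumes field measurements have already been collected for each variant (for example by a real-user monitoring script) and compares the 75th percentile against Google's published "good" thresholds: LCP at most 2.5 s, INP at most 200 ms, and CLS at most 0.1. The function names, metric keys, and sample values are hypothetical.

```python
import math

# "Good" thresholds for Core Web Vitals, assessed at the 75th percentile of page loads.
GOOD_THRESHOLDS = {"LCP_ms": 2500, "INP_ms": 200, "CLS": 0.1}


def p75(values: list[float]) -> float:
    """75th percentile using the nearest-rank method."""
    if not values:
        raise ValueError("need at least one measurement")
    ordered = sorted(values)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def cwv_report(samples: dict[str, list[float]]) -> dict[str, tuple[float, bool]]:
    """Map each metric to (p75 value, whether it meets the 'good' threshold)."""
    report = {}
    for metric, vals in samples.items():
        value = p75(vals)
        report[metric] = (value, value <= GOOD_THRESHOLDS[metric])
    return report


# Hypothetical field measurements for the two variants of one experiment.
variant_a = {"LCP_ms": [2500, 2600, 2700, 2800, 2900, 3000, 3100, 3400],
             "INP_ms": [90, 120, 140, 150, 160, 180, 210, 300],
             "CLS": [0.02, 0.03, 0.04, 0.05, 0.06, 0.08, 0.12, 0.15]}
variant_b = {"LCP_ms": [1800, 1900, 2100, 2200, 2300, 2400, 2600, 3100],
             "INP_ms": [80, 100, 120, 130, 140, 150, 190, 260],
             "CLS": [0.01, 0.02, 0.02, 0.03, 0.04, 0.05, 0.09, 0.11]}
for name, samples in (("A", variant_a), ("B", variant_b)):
    print(name, cwv_report(samples))
```

Comparing the two reports shows whether a change moved a variant across a threshold; in this made-up data only variant A's LCP misses the "good" mark.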

Ultimately, A/B testing and iteration shift design from a one-time project to an ongoing optimization process. Instead of guessing what might work, teams observe what actually works for real users and scale those improvements. Over time, this data-driven cycle builds digital experiences that are not only visually polished but measurably better at turning visitors into leads, customers, or long-term users.